Environment preparation

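Five VMs running CentOS 7, each with at least 1 CPU core and 1 GB of RAM. Every machine in the node role needs at least one spare disk of 5 GB or more attached for OSD storage.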
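
The minimums above could be checked on each VM before going further. The following is only a sketch: `meets_minimum` and `preflight` are hypothetical helper names, and the 900000 kB memory floor is an assumed allowance rather than a value from this guide.

```shell
#!/bin/sh
# Hypothetical preflight check for the minimums stated above.

meets_minimum() {
    # $1 = CPU cores, $2 = total memory in kB.
    # The 900000 kB floor is an assumed allowance: MemTotal on a 1 GB VM
    # reads a little under 1 GB because the kernel reserves some memory.
    [ "$1" -ge 1 ] && [ "$2" -ge 900000 ]
}

preflight() {
    cores=$(nproc)
    mem_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    if meets_minimum "$cores" "$mem_kb"; then
        echo "ok: $cores core(s), $mem_kb kB RAM"
    else
        echo "below minimum: $cores core(s), $mem_kb kB RAM"
        return 1
    fi
    # List whole block devices so the spare OSD disk can be confirmed by eye.
    lsblk -d -o NAME,SIZE,TYPE
}
```

Running `preflight` on a node prints the detected resources followed by the disk list; the spare disk should appear there with no partitions in use.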

Add a disk to node1, node2, and node3 (the OSD steps later in this guide use /dev/sdb):

Disable the firewall and SELinux on every machine (all nodes)

[root@admin ~]# systemctl stop firewalld

[root@admin ~]# systemctl disable firewalld

[root@admin ~]# setenforce 0

[root@admin ~]# sed -i 's/enforcing/disabled/' /etc/selinux/config

Create a regular user on every node and set its password (all nodes)

[root@admin ~]# useradd cephu

[root@admin ~]# echo 1 |passwd --stdin cephu

Set the hostname on every node and make the hosts resolve one another (all nodes)

Using admin as the example:

[root@admin ~]# hostnamectl set-hostname admin

[root@admin ~]# vim /etc/hosts

192.168.106.10 admin

192.168.106.20 node1

192.168.106.30 node2

192.168.106.40 node3

192.168.106.50 ceph-client

Make sure the newly created user has sudo privileges on every Ceph node (all nodes)

[root@admin ~]# visudo

root ALL=(ALL) ALL

cephu ALL=(root) NOPASSWD:ALL

Set up passwordless SSH login (admin)

[root@admin ~]# su - cephu

[cephu@admin ~]$ ssh-keygen

[cephu@admin ~]$ ssh-copy-id cephu@ceph-client

[cephu@admin ~]$ ssh-copy-id cephu@node1

[cephu@admin ~]$ ssh-copy-id cephu@node2

[cephu@admin ~]$ ssh-copy-id cephu@node3

Add the ~/.ssh/config file (admin)

Configure the file as follows so that ceph-deploy can log in to the Ceph nodes with the user name you created.

[cephu@admin ~]$ mkdir -p ~/.ssh

[cephu@admin ~]$ vim ~/.ssh/config

Host node1

Hostname node1

User cephu

Host node2

Hostname node2

User cephu

Host node3

Hostname node3

User cephu

Add the package repository and install ceph-deploy (admin)

[root@admin ~]# vim /etc/yum.repos.d/ceph.repo

[ceph-noarch]

name=Ceph noarch packages

baseurl=https://download.ceph.com/rpm-luminous/el7/noarch

enabled=1

gpgcheck=1

type=rpm-md

gpgkey=https://download.ceph.com/keys/release.asc

[root@admin ~]# yum makecache

[root@admin ~]# yum update

[root@admin ~]# yum install -y ceph-deploy

Deploying the Ceph cluster

Create a working directory for cephu (admin)

All ceph-deploy commands must be run from this directory.

[root@admin ~]# su - cephu

[cephu@admin ~]$ mkdir my-cluster

Create the cluster (admin)

First download and install the following package; without it, ceph-deploy fails with an error.

[cephu@admin ~]$ wget https://files.pythonhosted.org/packages/5f/ad/1fde06877a8d7d5c9b60eff7de2d452f639916ae1d48f0b8f97bf97e570a/distribute-0.7.3.zip

[cephu@admin ~]$ unzip distribute-0.7.3.zip

[cephu@admin ~]$ cd distribute-0.7.3

[cephu@admin distribute-0.7.3]$ sudo python setup.py install

[cephu@admin ~]$ cd my-cluster/

[cephu@admin my-cluster]$ ceph-deploy new node1

[cephu@admin my-cluster]$ ls

# If the following files appear, the cluster was created successfully

ceph.conf ceph-deploy-ceph.log ceph.mon.keyring
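
The same check can be scripted instead of read off the `ls` output. A minimal sketch, assuming the three file names shown in the listing above; `check_new_output` is a hypothetical helper, not part of ceph-deploy.

```shell
#!/bin/sh
# Hypothetical helper: report whether the files that `ceph-deploy new`
# left behind in the listing above exist in the given directory.
check_new_output() {
    dir="${1:-.}"
    missing=0
    for f in ceph.conf ceph-deploy-ceph.log ceph.mon.keyring; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing: $f"
            missing=1
        fi
    done
    # Exit status 0 only when every expected file was found.
    return "$missing"
}
```

For example, `check_new_output ~/my-cluster && echo "cluster files present"` prints the confirmation only when all three files exist.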

Manually install Ceph on the three nodes (node1, node2, node3)

Install the EPEL repository

[root@node1 ~]# yum install -y epel-release

Create the Ceph repository

[root@node1 ~]# vim /etc/yum.repos.d/ceph.repo

[Ceph]

name=Ceph packages for $basearch

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/$basearch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[Ceph-noarch]

name=Ceph noarch packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1

[ceph-source]

name=Ceph source packages

baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS

enabled=1

gpgcheck=0

type=rpm-md

gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc

priority=1
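
The three sections above differ only in their section name, description, and the last path component of `baseurl`, so the file could be generated rather than typed three times. A minimal sketch, assuming the same Aliyun mirror and Luminous release as above; `print_section` and `print_ceph_repo` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers that print the repo file shown above.
MIRROR="http://mirrors.aliyun.com/ceph"   # mirror used in this guide
RELEASE="rpm-luminous/el7"

print_section() {
    # $1 = section name, $2 = description, $3 = last path component
    cat <<EOF
[$1]
name=$2
baseurl=$MIRROR/$RELEASE/$3
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
priority=1

EOF
}

print_ceph_repo() {
    # Single quotes keep $basearch literal so that yum expands it, not the shell.
    print_section Ceph 'Ceph packages for $basearch' '$basearch'
    print_section Ceph-noarch 'Ceph noarch packages' noarch
    print_section ceph-source 'Ceph source packages' SRPMS
}
```

On each node the output could then be written as root, for example with `print_ceph_repo > /etc/yum.repos.d/ceph.repo`.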

[root@node1 ~]# yum install ceph ceph-radosgw -y

Verify the installation

[root@node1 ~]# ceph --version

ceph version 12.2.13 (584a20eb0237c657dc0567da126be145106aa47e) luminous (stable)

Initialize the mon (admin)

[root@admin ~]# su - cephu

[cephu@admin ~]$ cd my-cluster/

[cephu@admin my-cluster]$ ceph-deploy mon create-initial

Allow each node to run ceph commands without specifying a user name (admin)

[cephu@admin my-cluster]$ ceph-deploy admin node1 node2 node3

Install ceph-mgr (admin)

This step applies only to Luminous and prepares for using the dashboard (admin)

[cephu@admin my-cluster]$ ceph-deploy mgr create node1

Add OSDs (admin)

[cephu@admin my-cluster]$ ceph-deploy osd create --data /dev/sdb node1

[cephu@admin my-cluster]$ ceph-deploy osd create --data /dev/sdb node2

[cephu@admin my-cluster]$ ceph-deploy osd create --data /dev/sdb node3

Check the cluster status

[cephu@admin my-cluster]$ ssh node1 sudo ceph -s

cluster:

id: 8b765619-0fe7-4826-a79e-d0d0be9c2931

health: HEALTH_OK

services:

mon: 1 daemons, quorum node1

mgr: node1(active)

osd: 3 osds: 3 up, 3 in # HEALTH_OK with 3 OSDs up means the deployment succeeded

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0B

usage: 3.01GiB used, 12.0GiB / 15.0GiB avail

pgs:

Configuring the client to use rbd

Create a storage pool (node1)

[cephu@node1 ~]$ sudo ceph osd pool create rbd 128 128

pool 'rbd' created

Initialize the storage pool (node1)

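[cephu@node1 ~]$ sudo rbd pool init rbd

Install ceph on the client (ceph-client)

Install ceph manually on ceph-client, following the same steps used earlier for node1, node2, and node3 (EPEL repository, Ceph repository, then yum install).

Allow the client to run ceph commands without specifying a user name (admin)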

[cephu@admin my-cluster]$ ceph-deploy admin ceph-client

Make the admin keyring readable on the client (ceph-client)

[cephu@ceph-client ~]$ sudo chmod +r /etc/ceph/ceph.client.admin.keyring

Edit the ceph configuration file on the client (ceph-client)

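This step avoids an error when mapping the image. A value of 1 enables only the layering feature, so new images are created with a feature set that the CentOS 7 kernel rbd client can map.

[cephu@ceph-client ~]$ sudo sh -c 'echo "rbd_default_features = 1" >> /etc/ceph/ceph.conf'

Create a block device image (ceph-client)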

The size is given in MB, so 4096 means 4 GB.

[cephu@ceph-client ~]$ rbd create foo --size 4096

Map the image to the host (ceph-client)

[cephu@ceph-client ~]$ sudo rbd map foo --name client.admin

Mount the block device (ceph-client)

[cephu@ceph-client ~]$ sudo mkfs.ext4 -m 0 /dev/rbd/rbd/foo

[cephu@ceph-client ~]$ sudo mkdir /mnt/ceph-block-device

[cephu@ceph-client ~]$ sudo mount /dev/rbd/rbd/foo /mnt/ceph-block-device

[cephu@ceph-client ~]$ cd /mnt/ceph-block-device

[cephu@ceph-client ceph-block-device]$ sudo touch test.txt

[cephu@ceph-client ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 17G 1.9G 16G 11% /

devtmpfs 898M 0 898M 0% /dev

tmpfs 910M 0 910M 0% /dev/shm

tmpfs 910M 9.6M 901M 2% /run

tmpfs 910M 0 910M 0% /sys/fs/cgroup

/dev/sda1 1014M 146M 869M 15% /boot

tmpfs 182M 0 182M 0% /run/user/0

/dev/rbd0 3.9G 16M 3.8G 1% /mnt/ceph-block-device

Dashboard configuration (node1)

Create the mgr key

[cephu@node1 ~]$ sudo ceph auth get-or-create mgr.node1 mon 'allow profile mgr' osd 'allow *' mds 'allow *'

[mgr.node1]

key = AQCn735fpmEODxAAt6jGd1u956wBIDyvyYmruw==

Start the ceph-mgr daemon

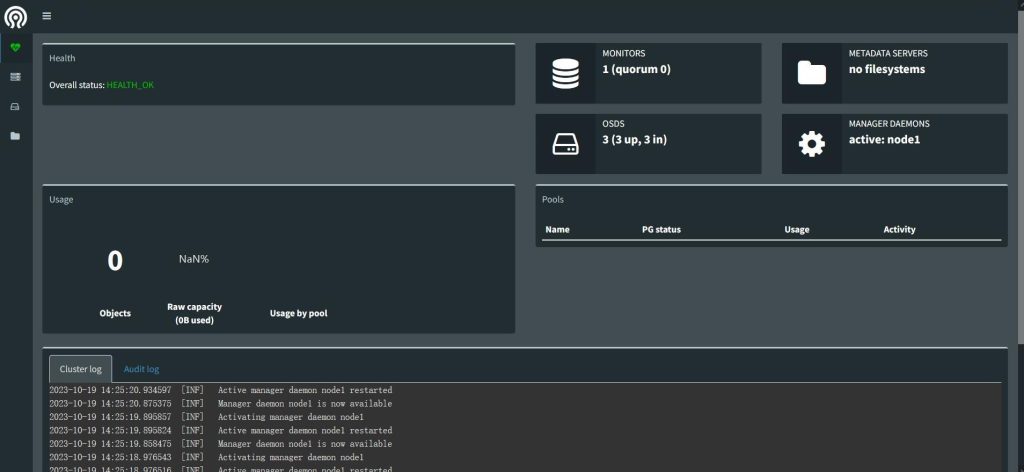

[cephu@node1 ~]$ sudo ceph-mgr -i node1

Check the ceph status

Confirm that the mgr status is active.

[cephu@node1 ~]$ sudo ceph status

cluster:

id: 8b765619-0fe7-4826-a79e-d0d0be9c2931

health: HEALTH_OK

services:

mon: 1 daemons, quorum node1

mgr: node1(active, starting)

osd: 3 osds: 3 up, 3 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0B

usage: 3.01GiB used, 12.0GiB / 15.0GiB avail

pgs:

Enable the dashboard module

[cephu@node1 ~]$ sudo ceph mgr module enable dashboard

Bind the IP address of the ceph-mgr node that runs the dashboard module

The IP address is that of the mgr node, that is, node1 (192.168.106.20 in /etc/hosts).

[cephu@node1 ~]$ sudo ceph config-key set mgr/dashboard/node1/server_addr 192.168.106.20

Web login

Enter 192.168.106.20:7000 in the browser address bar.
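
Reachability can also be probed from a shell before opening a browser. A minimal sketch, assuming the Luminous dashboard answers over plain HTTP on port 7000 as in the address above and that curl is installed; `dashboard_url` and `check_dashboard` are hypothetical helper names.

```shell
#!/bin/sh
# Hypothetical helpers for probing the dashboard address used above.

dashboard_url() {
    # $1 = mgr host or IP, $2 = port (defaults to 7000, as used above)
    echo "http://$1:${2:-7000}"
}

check_dashboard() {
    # Succeeds if the URL answers without an HTTP error within 5 seconds.
    curl --silent --fail --max-time 5 --output /dev/null "$(dashboard_url "$@")"
}
```

For example, `check_dashboard 192.168.106.20 && echo "dashboard reachable"` prints the message only when the mgr node answers.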
